University of Pennsylvania, CIS 565: GPU Programming and Architecture, Final Project

This project implements a real-time texture editing approach that is largely engine-agnostic. The framework is designed to be easily integrated into existing games and provides a web-based user interface for texture editing. The web interface is rendered off-screen into a bitmap using Chromium Embedded Framework (CEF) and is then sent to the game via CUDA Inter-Process Communication (IPC) for display. A DirectX 11 implementation and a DirectX 12 proof of concept are provided, but the framework can be adapted to other graphics APIs. The DirectX 11 implementation targets Grand Theft Auto V and makes use of certain game-specific features, such as a free camera with entity selection, to allow for a better user experience. For DirectX 12, a basic proof of concept demonstrates the framework's engine-agnostic capabilities: selection is done via the keyboard and no game-specific features are used.

Real-time low latency texture editing of a third party game (GTA 5) using our framework on an iPad

The primary motivation is to foster creativity and enable users to create their own content for games without having to worry about game formats, file protections and other issues. Just pick an object and start drawing! These custom worlds could then also be shared with other players through persistence mechanisms, allowing a truly universal and collaborative modding experience.

The project is divided into four milestones, each described in more detail below. They are ordered to allow gradual development of the framework, starting with a proof of concept and ending with a polished product. The first milestone focuses on the initial proof of concept and the integration of the framework into Grand Theft Auto 5. The second milestone builds on the first and adds the capability to read back textures from the GPU as well as a web application for texture editing. The third milestone focuses on making the framework more engine-agnostic and provides a proof of concept for DirectX 12. It also includes a demo of mobile authoring on iOS using a progressive web app (PWA), which shows off the framework's capabilities on mobile devices as well as its low latency. The final milestone adds persistence and focuses on performance optimization.

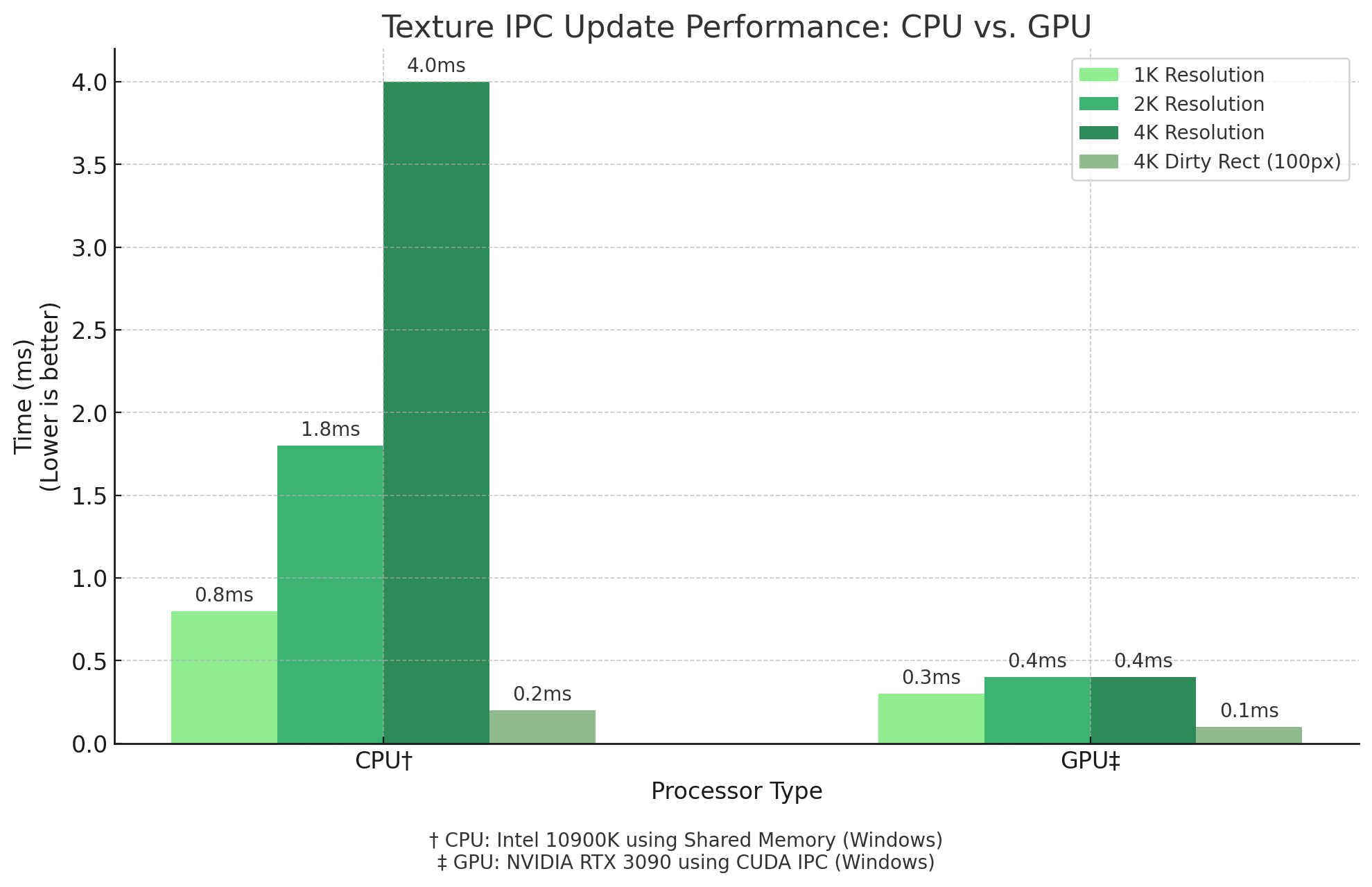

Replacing a classic CPU shared-memory IPC approach with CUDA IPC made raw copies up to 100x faster and still yielded roughly 10x real-world gains. At 4K render resolution, we can now update our texture in 0.4ms, down from roughly 4ms! The gap between the raw and real-world numbers stems from the overhead of mapping and unmapping resources, which is still necessary for the CUDA implementation. The primary challenge for this milestone was the CUDA integration for GTA 5. It seems that the game's render state is not compatible with CUDA, and trying to unmap a DirectX resource from CUDA always fails. The solution was to patch an internal CUDA call that led to the error code being propagated to the caller. This allowed the resource to be unmapped successfully. In the future, a cleaner solution should be found that does not require patching internal calls.

The video below shows a WebGL demo rendered at 60 FPS as an overlay on top of GTA 5. The demo is rendered off-screen using Chromium Embedded Framework (CEF) and then sent to the game via CUDA IPC for display.

High-frequency texture updates are no problem

Lastly, a free camera was implemented to allow for entity selection. The camera is controlled with the mouse and keyboard and lets the user select any entity in the game world. This is game-specific and improves the user experience, but it is not necessary for the framework to work. The video below shows the free camera in action.

Free camera in GTA to allow entity selection (game specific)

The primary challenge for this milestone was the texture read-back and, more specifically, identifying the correct texture. In the case of Grand Theft Auto 5, we use some game-specific data to help us find the textures associated with a given mesh. The approach would also work without game-specific data, but the user would then have to select the correct texture manually, and the candidate list would most likely include more unrelated textures.

Texture selection UI supporting all textures bound to the current mesh

Once the texture is identified, we can use its associated DirectX 11 resource pointer to read back the texture data. We map the texture, copy its data to a CPU buffer and unmap it. The CPU buffer is then sent to the web application for editing. Once the user is done editing, the texture is sent back to the game and the game's texture is replaced with the edited version. The image below shows the web application for texture editing. For objects with multiple textures, we allow the user to pick which texture to edit. The web application is hosted on a small web server that is also responsible for broadcasting texture updates to the game.
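The map, copy and unmap step can be illustrated with a minimal Python sketch. It assumes an RGBA8 texture and a mapped buffer whose rows may be padded, as is the case with the `RowPitch` field of a mapped DirectX 11 subresource. The function name `copy_mapped_rows` and its parameters are hypothetical and not part of the framework.

```python
def copy_mapped_rows(mapped: bytes, width: int, height: int,
                     row_pitch: int, bytes_per_pixel: int = 4) -> bytes:
    """Copy a mapped texture into a tightly packed CPU buffer.

    Assumption: `mapped` holds `height` rows, each starting `row_pitch`
    bytes after the previous one; only the first width * bytes_per_pixel
    bytes of every row are pixel data, the rest is padding.
    """
    row_bytes = width * bytes_per_pixel
    if row_pitch < row_bytes:
        raise ValueError("row_pitch is smaller than one row of pixels")
    if height > 0 and len(mapped) < row_pitch * (height - 1) + row_bytes:
        raise ValueError("mapped buffer is too small for the given size")

    packed = bytearray(row_bytes * height)
    for y in range(height):
        src = y * row_pitch   # padded source offset
        dst = y * row_bytes   # tightly packed destination offset
        packed[dst:dst + row_bytes] = mapped[src:src + row_bytes]
    return bytes(packed)
```

Copying row by row rather than in one block keeps the padding out of the buffer that is sent to the web application, so the editor can treat the data as a plain `width * height` image.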

Milestone 3 was a research milestone that investigated two main goals: Can the user experience for texture selection be improved by leveraging vertex buffer data? And: Can the framework be made more engine-agnostic?

Both questions were answered with a yes. The video below shows the result of the vertex buffer research. The vertex buffer for our selected entity is used to determine which texture to edit. Please note that this uses game-specific data and hence the vertex buffer is actually read before being sent to the GPU. A more engine-agnostic approach might have to work slightly differently. However, the video shows that the approach works and that it is possible to improve texture selection accuracy by leveraging vertex buffer data. We can intersect a ray with the vertex buffer and then use the intersection point to identify the sub-mesh that was hit. This allows for fine-grained texture selection and is a significant improvement over the previous approach.

Reading the vertex buffer for an entity allows for fine-grained texture selection
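The ray test described above can be sketched as follows. This is an illustrative example rather than the project's code: it uses the standard Möller-Trumbore ray/triangle test and assumes an indexed triangle list in which every sub-mesh is a contiguous index range. The names `ray_triangle` and `pick_submesh` and the `(name, first_index, index_count)` layout are assumptions made for this sketch.

```python
from typing import List, Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3,
                 eps: float = 1e-9) -> Optional[float]:
    """Moller-Trumbore test: distance t along the ray, or None on a miss."""
    e1, e2 = _sub(v1, v0), _sub(v2, v0)
    p = _cross(direction, e2)
    det = _dot(e1, p)
    if abs(det) < eps:            # ray is parallel to the triangle plane
        return None
    inv = 1.0 / det
    t_vec = _sub(origin, v0)
    u = _dot(t_vec, p) * inv
    if u < 0.0 or u > 1.0:
        return None
    q = _cross(t_vec, e1)
    v = _dot(direction, q) * inv
    if v < 0.0 or u + v > 1.0:
        return None
    t = _dot(e2, q) * inv
    return t if t > eps else None  # ignore hits behind the origin


def pick_submesh(origin: Vec3, direction: Vec3,
                 vertices: Sequence[Vec3], indices: Sequence[int],
                 submeshes: List[Tuple[str, int, int]]) -> Optional[str]:
    """Return the name of the sub-mesh whose triangle is hit first."""
    best_t, best_name = None, None
    for name, first, count in submeshes:
        for i in range(first, first + count - 2, 3):
            tri = [vertices[indices[i + k]] for k in range(3)]
            t = ray_triangle(origin, direction, *tri)
            if t is not None and (best_t is None or t < best_t):
                best_t, best_name = t, name
    return best_name
```

Once the closest sub-mesh is known, the textures bound to that sub-mesh are the natural candidates to offer in the editor. A real implementation would likely want an acceleration structure instead of this linear scan over all triangles.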

Goal number two was also achieved. The framework was made more engine-agnostic by replacing game-specific code with more generic code and by porting it to DirectX 12. We used the Helios DirectX 12 renderer for our tests. Since we do not want to use any game-specific code, we cannot easily select entities. Instead, we developed a new strategy: we intercept all draw calls (such as DrawIndexedInstanced) and compare the primitive count against a threshold. This threshold can be modified using the keyboard. Depending on whether the primitive count is above or below our threshold, we color the draw call differently.

An image of a possible initial state can be seen below.

Coloring draw calls depending on primitive count

This allows us to quickly binary search for the correct draw call and hence the correct texture. The video below shows the result of this approach. The user can use the keyboard to modify the threshold and hence the coloring of the draw calls. Once the primitive count of the draw call is close to the current threshold, the draw call turns green. Now, the user can start editing the texture. This example replaces the texture with the previously used WebGL demo to highlight the high performance of the framework.

Picking the mesh using binary search on the primitive count
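The threshold-based selection can be sketched in a few lines of Python. This is a simplified model, not the hook code itself: green for a match comes from the description above, while the colors `"red"` and `"blue"`, the `tolerance` parameter and both function names are assumptions made for illustration.

```python
from typing import List


def classify_draw_call(primitive_count: int, threshold: int,
                       tolerance: int = 0) -> str:
    """Pick a debug color for one intercepted draw call."""
    if abs(primitive_count - threshold) <= tolerance:
        return "green"            # close enough: this is the selected call
    return "red" if primitive_count > threshold else "blue"


def search_threshold(target_count: int, low: int, high: int,
                     tolerance: int = 0) -> List[int]:
    """Simulate a user bisecting the threshold until the target turns green.

    Returns every threshold that was tried, in order.
    """
    tried = []
    while low <= high:
        threshold = (low + high) // 2
        tried.append(threshold)
        color = classify_draw_call(target_count, threshold, tolerance)
        if color == "green":
            break
        if color == "red":        # target has more primitives: go up
            low = threshold + 1
        else:                     # target has fewer primitives: go down
            high = threshold - 1
    return tried
```

Because every keypress halves the remaining range, a draw call with a primitive count anywhere below roughly one million is isolated in about 20 steps. Draw calls that share the same primitive count cannot be told apart this way and would need an additional tie-breaker.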

Lastly, we developed a progressive web app that bundles the editor and works on iOS. It highlights the flexibility and low latency of our framework.

Real-time low latency texture editing of a third party game (GTA 5) using our framework on an iPad (full video)

The final milestone focused on two main goals: Persistence and performance. For persistence, we implemented a simple file-based approach that allows us to quickly load modified textures from disk on game startup (only for GTA 5) and hence persist the changes in the game world. This doubles as an export/sharing functionality that allows users to share their creations with other players.

To further improve performance, we implemented a simple "dirty rectangle" mechanism that only sends changed pixels to the GPU instead of the entire texture. This significantly reduces the amount of data that needs to be sent and improves performance. However, our current implementation is largely bottlenecked by the mapping and unmapping, so this would only really benefit textures that are large and have only a few pixels changed. For smaller textures, the overhead of mapping and unmapping is too large to see any significant performance gains.

CUDA IPC vastly outperforms the CPU implementation at high resolutions
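A "dirty rectangle" update can be sketched as follows. This is a minimal CPU-side illustration under stated assumptions: tightly packed RGBA8 pixels and a single bounding rectangle per update. The function names `dirty_rect` and `extract_rect` are hypothetical.

```python
from typing import Optional, Tuple

Rect = Tuple[int, int, int, int]  # (x, y, width, height) in pixels


def dirty_rect(old: bytes, new: bytes, width: int, height: int,
               bpp: int = 4) -> Optional[Rect]:
    """Bounding rectangle of all pixels that differ, or None if unchanged."""
    stride = width * bpp
    min_x, min_y, max_x, max_y = width, height, -1, -1
    for y in range(height):
        row_old = old[y * stride:(y + 1) * stride]
        row_new = new[y * stride:(y + 1) * stride]
        if row_old == row_new:    # fast path: the whole row is unchanged
            continue
        min_y, max_y = min(min_y, y), max(max_y, y)
        for x in range(width):
            if row_old[x * bpp:(x + 1) * bpp] != row_new[x * bpp:(x + 1) * bpp]:
                min_x, max_x = min(min_x, x), max(max_x, x)
    if max_x < 0:
        return None
    return (min_x, min_y, max_x - min_x + 1, max_y - min_y + 1)


def extract_rect(pixels: bytes, width: int, rect: Rect, bpp: int = 4) -> bytes:
    """Copy only the pixels inside `rect` into a tightly packed buffer."""
    x, y, w, h = rect
    stride = width * bpp
    return b"".join(
        pixels[(y + r) * stride + x * bpp:(y + r) * stride + (x + w) * bpp]
        for r in range(h)
    )
```

For a small brush stroke on a 4K texture, the extracted patch is orders of magnitude smaller than the full image, which is where the saving in transferred data comes from. The fixed cost of mapping and unmapping is unaffected by the patch size.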

As a small surprise that was not announced last week, we leveraged the improved performance and demoed our progressive web app on an Oculus Quest 3. The demo was recorded using the Oculus Quest 3's built-in screen recording functionality as well as its screen sharing functionality, which highlights the low latency of our framework. The video below shows the demo in action. It uses an internet connection instead of a local server to underline the framework's flexibility and responsiveness.

Runs anywhere: Using the Quest 3 (thanks to Dr. Lane and Meta for the donation) to edit in Virtual Reality!

The special surprise for our final milestone is a vertex buffer export functionality that allows us to export all meshes in the vertex buffer to a glTF file. This file can then be viewed in the browser or in immersive AR/VR experiences. The video below shows the export functionality in action. We also added simple server communication that synchronizes the vertex buffer data between the game and the web application, so that meshes moving in the game world are also updated in the web application. This allows for a truly immersive experience where the user can see the game world in the browser. Future work could investigate also sending over texture and shader data to allow for a complete editing experience outside of the game. Maybe over winter break...

Future Work: Interacting with a live vertex buffer view will make for a truly immersive editing experience